|

Hi, My name is Dong Won. My advisors and friends usually call me “Don" :) I’m a PhD Student at MIT working on the foundations of multimodal social agents, where I focus on developing machine learning methods and evaluation for social intelligence. I am extremely grateful to be co-advised by Professor Cynthia Breazeal and Dr. Hae Won Park in the Personal Robots Group at the Media Lab and Professor Louis-Philippe Morency at the Language Technologies Institute at CMU. Prior to MIT, I graduated with a M.S. in Machine Learning and a B.S. in Statistics and Machine Learning from Carnegie Mellon University. My research is generously supported by the Schwarzman College of Computing Amazon AI Research Innovation Fellowship . I am also a 2025–2026 E14 VC Fund Fellow at MIT. Google Scholar / Linkedin / Twitter / Github / |

|

|

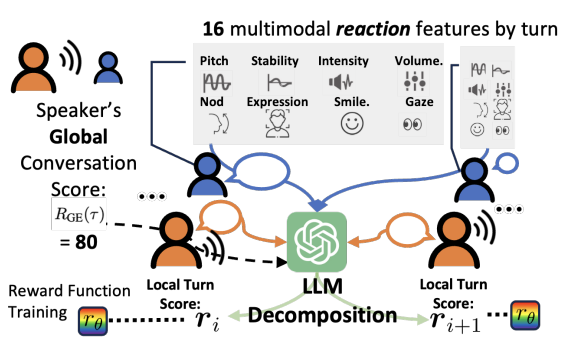

09/2025: Honored to be named a Amazon AI Research Innovation Fellow , supporting my doctoral research on multimodal social intelligence and foundation models for embodied AI. 09/2025: Selected as a 2025–2026 E14 VC Fund Fellow at MIT, supporting venture activities with the E14 Fund, an early-stage VC firm affiliated with MIT. 09/2025: Our paper on Aligning Dialogue Agents with Global Feedback via Large Language Model Multimodal Reward Decomposition is accepted at EMNLP 2025 (Findings). We align dialogue agents with global feedback through 17 multimodal features via LLM-based reward decomposition, then using these rewards to learn adapt LLMs from the rich combination of facial, prosodic, gaze, and affective cues humans naturally provide. 06/2025: Completed the MIT Research Mentoring Certificate Program, focused on effective and inclusive mentoring practices. 04/2025: Gave a Rising Stars Student Spotlight talk at the MIT Media Lab Bi-Annual Members’ Event on Embodied AI Agents in Real-World Social Interactions. 09/2024: Our paper on Global Reward to Local Rewards: Multimodal-Guided Decomposition for Improving Dialogue Agents is accepted at EMNLP 2024. This is a new work that extends alignment techniques such as RLHF for a multimodal signals using a single final score instead of intermediate annotations. 06/2024: I've started an research internship at Microsoft Research in NYC working on Human-Oriented AI! 08/2023: I'll be TA'ing and mentoring students for MIT MAS.630 Affective Computing and Ethics taught by Rosalind Picard at the MIT Media Lab, where I'll be giving lectures on advancements in Machine Learning for Affective Computing and Social Intelligence in LLMs. We encourage interested MIT/Harvard students to sign up for our class to learn about the future of Socio-Emotional AI! 07/2023: Our paper on Lecture Presentations Multimodal Dataset: Towards Understanding Multimodality in Educational Videos is accepted at ICCV 2023! 07/2023: Our paper on HIINT: Historical, Intra-and Inter-personal Dynamics Modeling with Cross-person Memory Transformer is accepted at ICMI 2023! 04/2023: Our paper on Multipar-T: Multiparty-Transformer for Capturing Contingent Behaviors in Group Conversations is accepted at IJCAI 2023! 03/2023: Our proposal for the 1st Workshop on Social and Affective Intelligence (SAI) has been accepted at ACII 2023! Please consider submitting your work! 03/2022: Our paper on Low-resource Adaptation for Personalized Co-Speech Gesture Generation is accepted at CVPR 2022. 07/2021: Our paper on Crossmodal clustered contrastive learning: Grounding of spoken language to gestures is accepted to GENEA Workshop @ ICMI 2021. 05/2021: We are organizing the First Workshop on Crossmodal Social Animation at ICCV 2021. 09/2020: Our paper on No Gestures Left Behind: Learning Relationships between Spoken Language and Freeform Gestures is accepted at Findings at EMNLP 2020. 07/2020: Our paper on Style Transfer for Co-Speech Gesture Animation is accepted at ECCV 2020 |

|

|

|

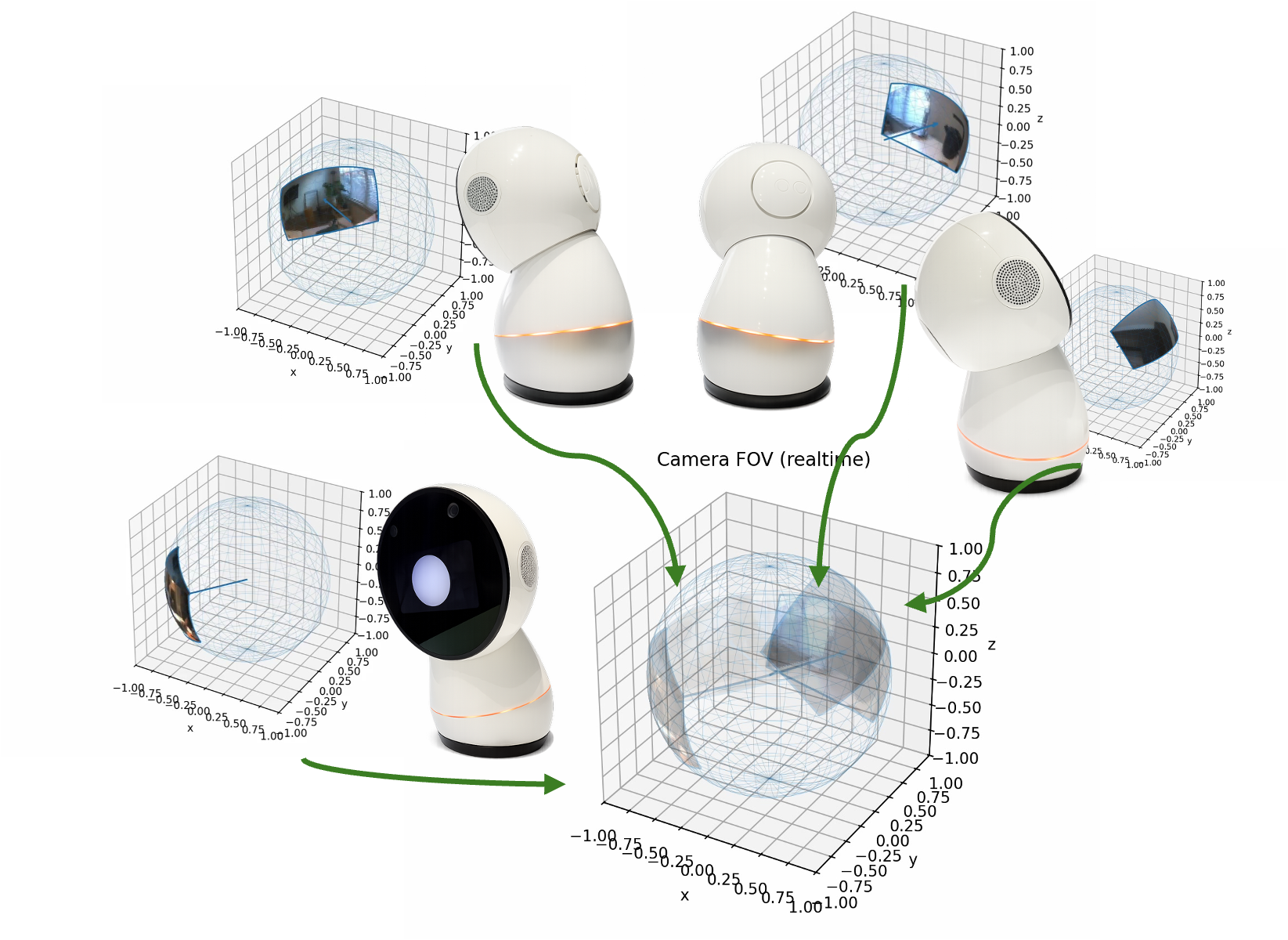

Dong Won Lee, Sarah Gillet, Louis-Philippe Morency, Cynthia Breazeal, Hae Won Park In Submission , 2025 paper, website We present a minimal system recipe for situated embodied conversation that pairs a real-time multimodal language model with a small set of tools for attention and active perception, enabling robots to interleave dialogue with “what to look at, when to look, and what to say” under tight latency constraints. |

|

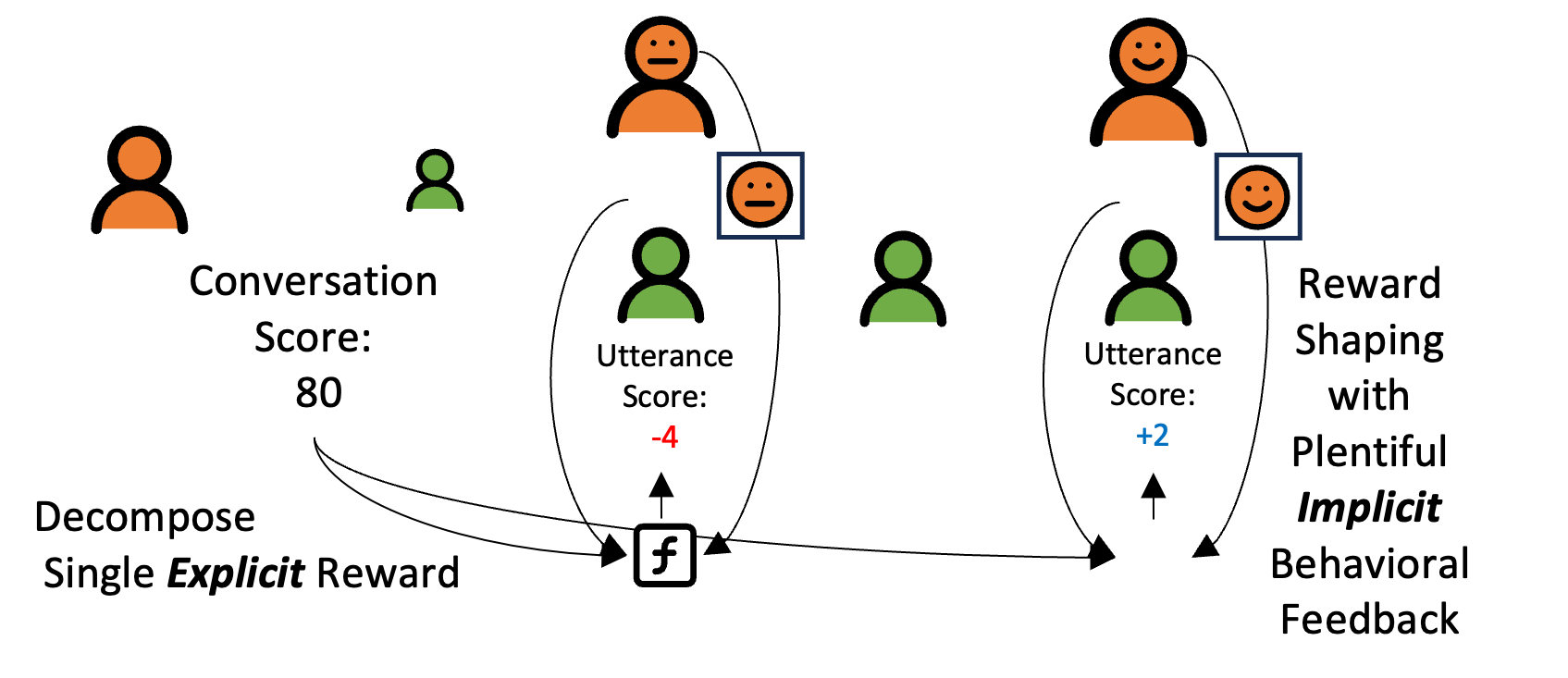

Dong Won Lee, Hae Won Park, Cynthia Breazeal, Louis-Philippe Morency, EMNLP (Findings) , 2025 paper We introduce a framework which uses a frozen large language model (LLM) to decompose global session-level feedback into fine-grained turn-level rewards for dialogue agents. Our method works in both text-only and multimodal settings (using cues like pitch and gaze), enabling reinforcement learning without dense supervision. The resulting reward models improve dialogue quality in human evaluations, showing that LLMs can effectively serve as general-purpose reward decomposers. |

|

Dong Won Lee, Yubin Kim, Denison Guvenoz, Sooyeon Jeong, Parker Malachowsky, Louis-Philippe Morency, Cynthia Breazeal, Hae Won Park In Submission , 2025 website / paper We introduce a large-scale collection of datasets of real-world human-robot interaction videos with 10K+ annotations to benchmark AI models' ability to identify and reason social interactions, providing a foundation for advancing socially intelligent AI. |

|

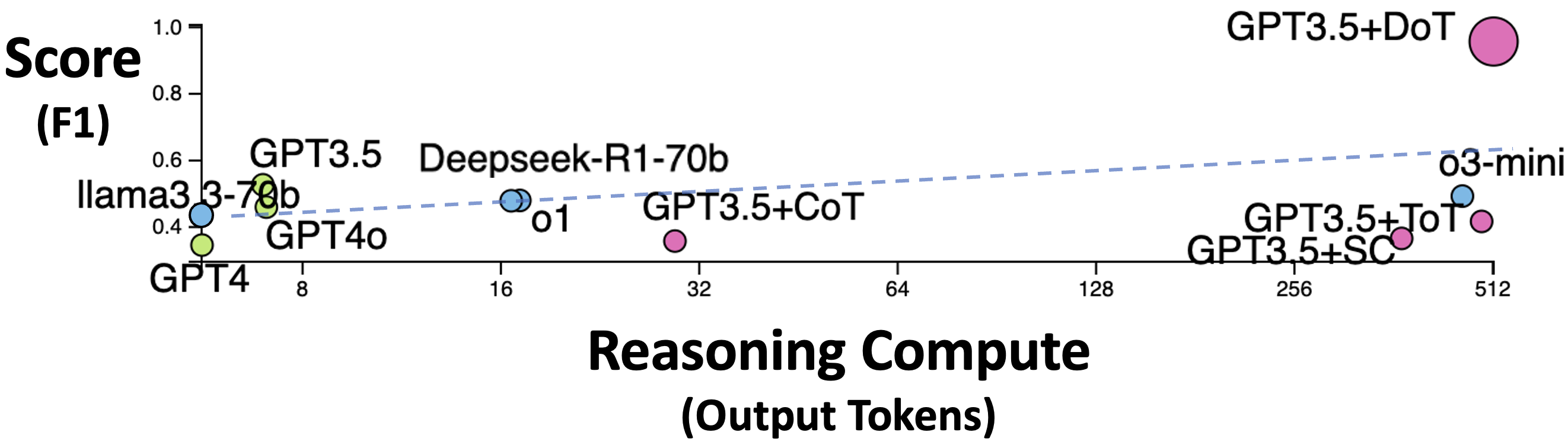

Yilin Qi*, Dong Won Lee*, Cynthia Breazeal, Hae Won Park ACL, NLP for Positive Impact Workshop, 2025 paper We show that augmenting older models like GPT-3.5 with reasoning strategies (e.g. DoT, CoT, Self-Consistency) outperforms state-of-the-art pre-trained models (e.g., DeepSeek-R1, o1) in recognizing and reframing unhelpful thoughts. |

|

Dong Won Lee, Hae Won Park, Yoon Kim, Cynthia Breazeal, Louis-Philippe Morency EMNLP , 2024 (Oral) paper / code / huggingface We introduce an approach named GELI, which automatically decomposes a single Global Explicit post-interaction score while incorporating Local Implicit feedback from multimodal signals to adapt a language model to become more conversational. |

|

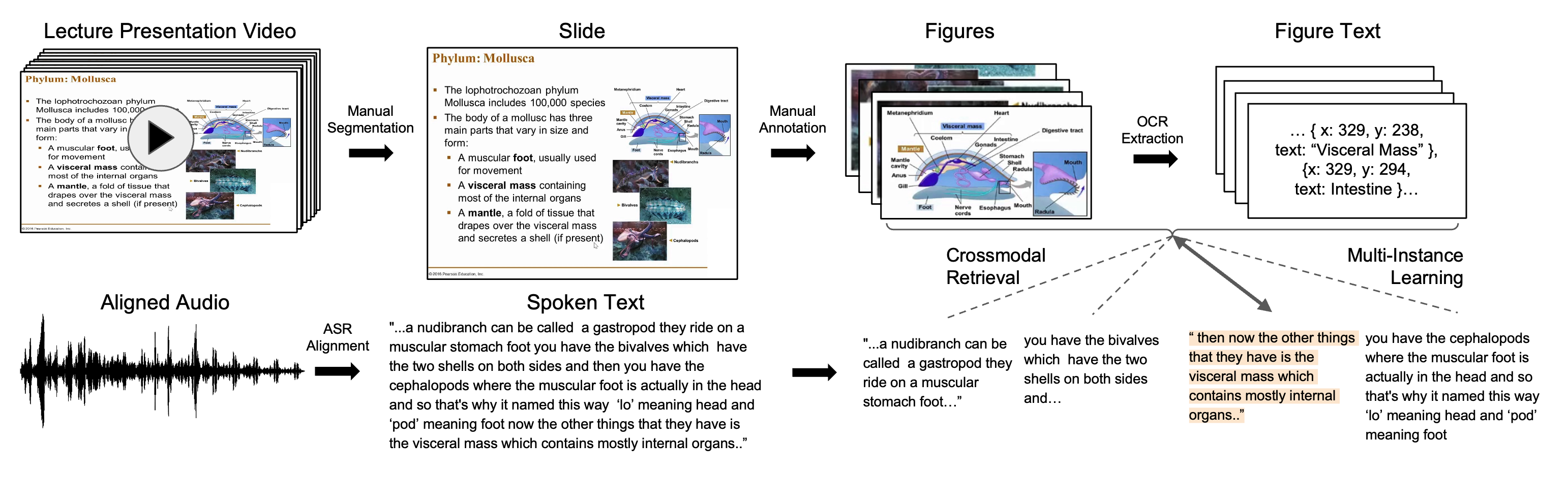

Dong Won Lee, Chaitanya Ahuja, Paul Pu Liang, Sanika Natu, Louis-Philippe Morency ICCV, 2023 paper / code We introduce the Multimodal Lecture Presentations dataset and PolyViLT a multimodal transformer trained with a multi-instance learning loss. We propose a large-scale benchmark testing the capabilities of machine learning models in multimodal understanding of educational content. |

|

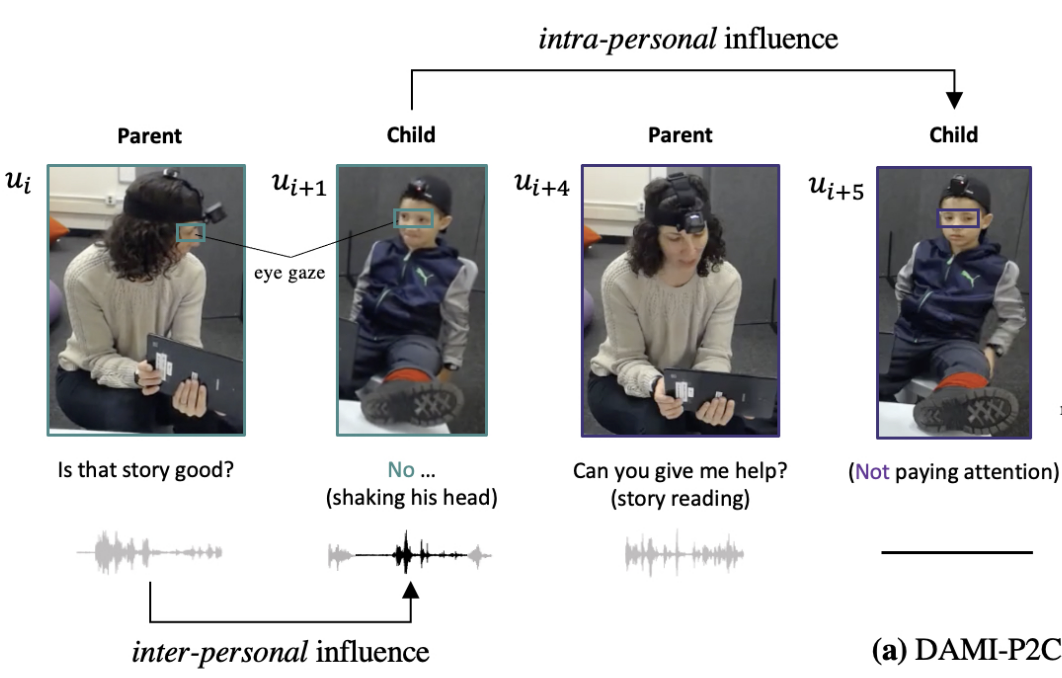

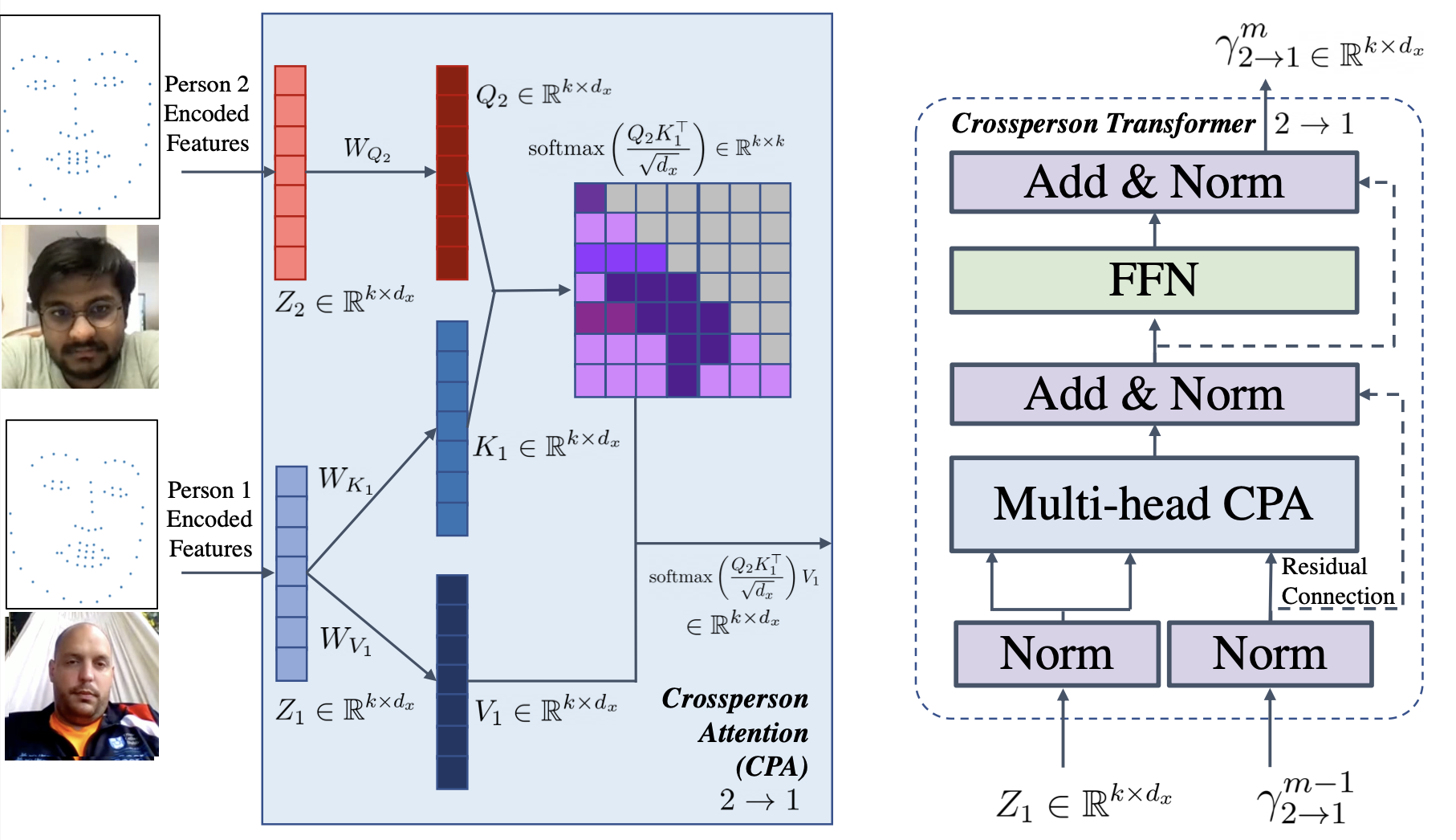

Yubin Kim, Dong Won Lee, Paul Pu Liang, Sharifa Algohwinem, Cynthia Breazeal, Hae Won Park ICMI, 2023 paper We model the Historical, Intra-and Inter-personal (HIINT) Dynamics in conversation by incorporating memory modules in the Cross-person Memory Transformer to address temporal coherence and better represent the context of conversational behaviors. |

|

Dong Won Lee, Yubin Kim, Rosalind Picard, Cynthia Breazeal, Hae Won Park IJCAI, 2023 (Oral) paper We introduce a new transformer architecture to model contingent behaviors in multiparty group conversations. |

|

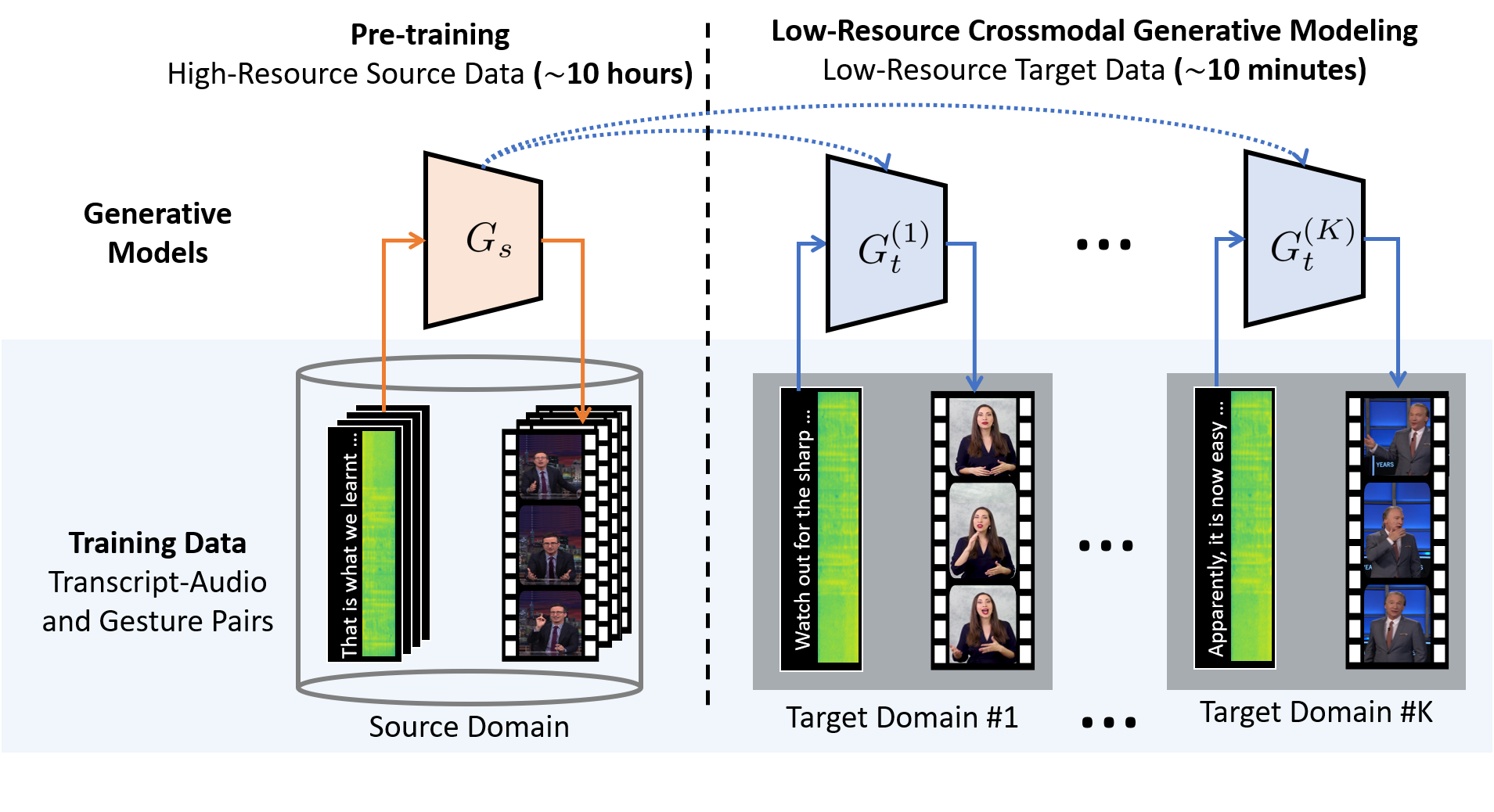

Chaitanya Ahuja, Dong Won Lee, Louis-Philippe Morency CVPR, 2022 paper / We propose a new approach in crossmodal generative modeling in low-resource settings in the hopes to to create a personalized gesture generation model (e.g. as part of a personalized avatar) with limited data from a new speaker. |

|

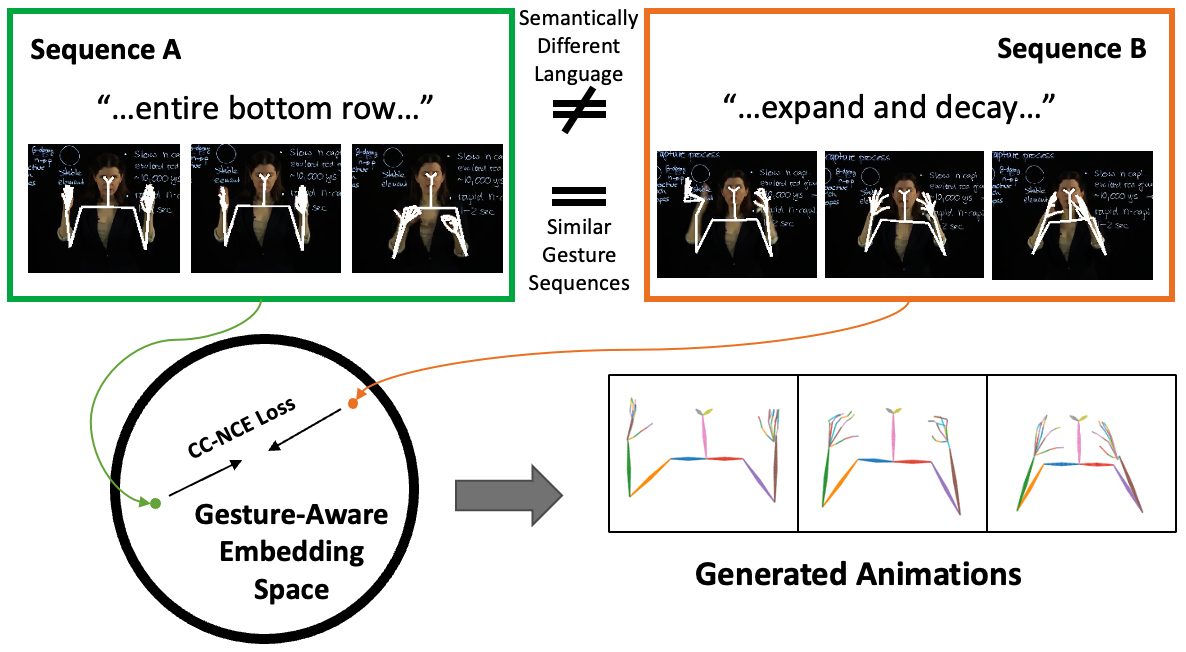

Dong Won Lee, Chaitanya Ahuja, Louis-Philippe Morency ICMI, GENEA Workshop, 2021 paper / presentation video / code We propose a new crossmodal contrastive learning loss to encourage a stronger grounding between gestures and spoken language. |

|

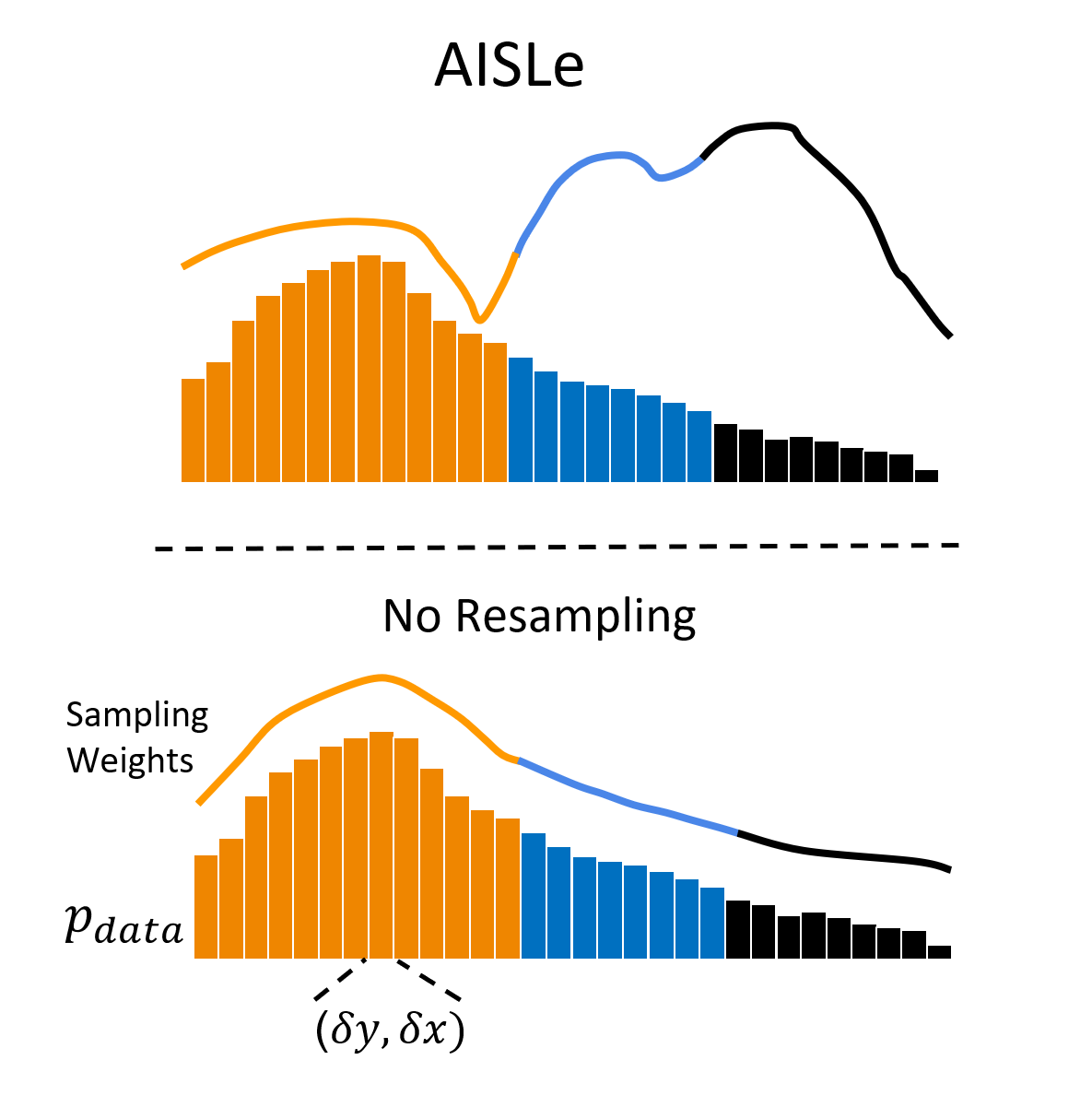

Chaitanya Ahuja, Dong Won Lee, Ryo Ishii, Louis-Philippe Morency EMNLP, Findings, 2020 paper / code We study relationships between spoken language and co-speech gestures to account for the long tail of text-gesture distribution. |

|

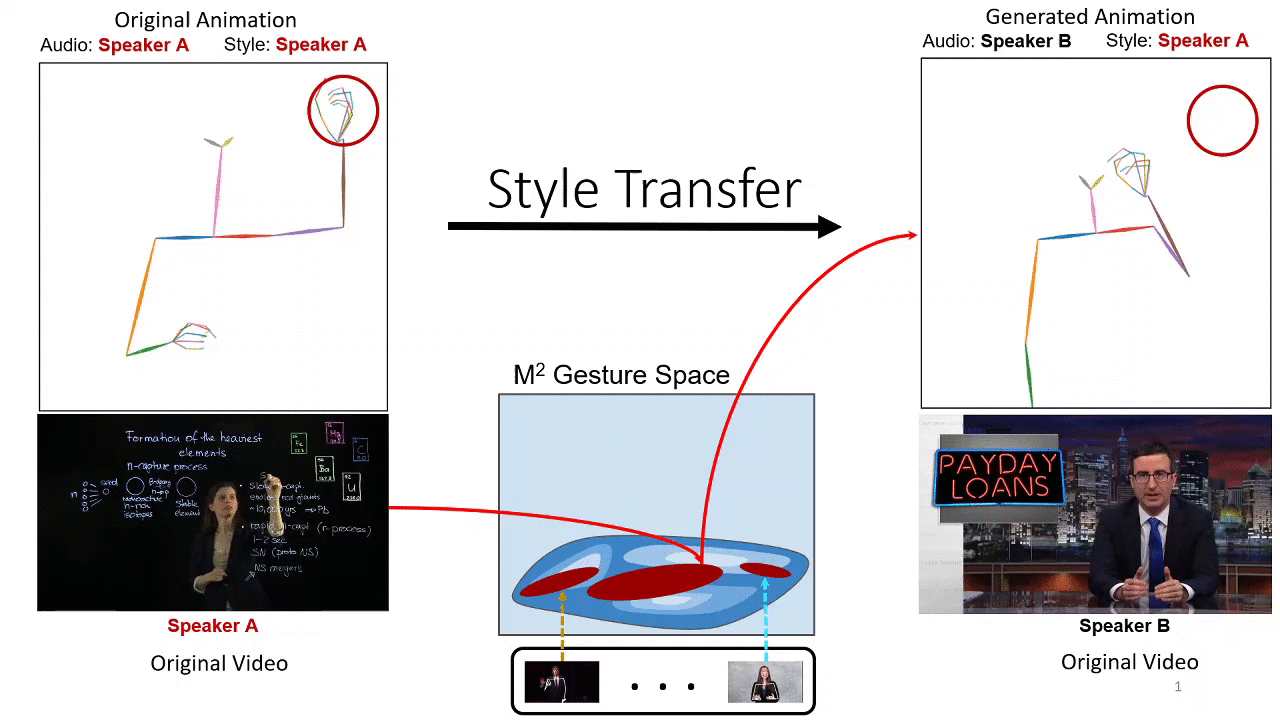

Chaitanya Ahuja, Dong Won Lee, Yukiko I. Nakano, Louis-Philippe Morency ECCV, 2020 project page / paper / code We propose a new style transfer model to learn individual styles of speaker's gestures. |

|

|

|

|

Chaitanya Ahuja, Dong Won Lee, Yukiko I. Nakano, Louis-Philippe Morency dataset page / download Link / code PATS was collected to study correlation of co-speech gestures with audio and text signals. The dataset consists of a diverse and large amount of aligned pose, audio and transcripts. |

|

|

|

Graduate TA, Fall 2023 |

|

Graduate TA, Spring 2022 |

|

Graduate TA, Spring 2021 |

|

Undergraduate TA, Fall 2019, Spring 2020, Fall 2020 (3 Semesters) |

|

Undergraduate TA, Fall 2020, Spring 2021 (2 Semesters) |

|

|

|

Reviewer |

|

Co-Organizing Chair workshop page |

|

Publication Chair workshop page / video |

|

Reviewer |

|

|

|

Previously, I had the incredible opportunity to be a member of a South Korean special operations unit deployed to Abu Dhabi (AKH14). Find me here: photo |

|

06/2024: EMNLP 2024 |

|

I have been blessed to meet amazing mentors who have guided me to become a better researcher: Mentors and Advisors: (in Alphabetical Order)

|

|

Website Credits Here: source code |